Would you fill your brand-new Ferrari with cheap and inferior fuel? It’s a question posed by Martin Bailey in his new guide: ‘Full Speed Ahead – how to make variable data PDF files that won’t slow your digital press’. It’s an analogy he uses to explain the importance of putting well-constructed PDF files through your DFE so that they don’t disrupt the printing process and the DFE runs as efficiently as possible.

Here are Martin’s recommendations to help you avoid making jobs that delay the printing process, so you can be assured that you’ll meet your print deadline reliably and achieve your printing goals effectively:

If you’re printing work that doesn’t make use of variable data on a digital press, you’re probably producing short runs. If you weren’t, you’d be more likely to choose an offset or flexo press instead. But “short runs” very rarely means a single copy.

Let’s assume that you’re printing, for example, 50 copies of a series of booklets, or of an imposed form of labels. In this case the DFE on your digital press only needs to RIP each PDF page once.

To continue the example, let’s assume that you’re printing on a press that can produce 100 pages per minute (or the equivalent area for labels etc.). If all your jobs are 50 copies long, you therefore need to RIP jobs at only two pages per minute (100ppm/50 copies). Once a job is fully RIPped and the copies are running on press you have plenty of time to get the next job prepared before the current one clears the press.

But VDP jobs place additional demands on the processing power available in a DFE because most pages are different to every other page and must therefore each be RIPped separately. If you’re printing at 100 pages per minute the DFE must RIP at 100 pages per minute; fifty times faster than it needed to process for fifty copies of a static job.
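To make the comparison concrete, here is a minimal sketch of the arithmetic. It is an illustration, not something taken from Martin's guide: the function name `required_rip_rate_ppm` and its parameters are hypothetical. It assumes that every copy of a static page reuses a single RIPped raster and that every page of a VDP job is unique.

```python
def required_rip_rate_ppm(press_speed_ppm: float, copies_per_page: int) -> float:
    """Pages per minute the DFE must RIP to keep the press fed.

    Assumption: each distinct page is RIPped once and the raster is reused
    for every copy, so the RIP load falls as the copy count rises.
    """
    if copies_per_page < 1:
        raise ValueError("copies_per_page must be at least 1")
    return press_speed_ppm / copies_per_page


# Static job: 50 copies of each page on a 100 ppm press.
static_rate = required_rip_rate_ppm(100, 50)

# VDP job: every page is different, so effectively one copy per page.
vdp_rate = required_rip_rate_ppm(100, 1)

print(f"Static job: RIP at {static_rate:g} pages per minute")
print(f"VDP job:    RIP at {vdp_rate:g} pages per minute")
print(f"VDP needs {vdp_rate / static_rate:g}x the RIP throughput")
```

Real VDP jobs often share some content between pages, so the true multiplier usually sits somewhere between these two extremes.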

Each minor inefficiency in a VDP job will often only add between a few milliseconds and a second or two to the processing of each page, but those times need to be multiplied up by the number of pages in the job. An individual delay of half a second on every page of a 10,000-page job adds up to almost an hour and a half (about 83 minutes) for the whole job. For a really big job of a million pages, an extra tenth of a second per page adds more than 24 hours (almost 28) to the total processing time.
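If you want to check these figures for your own jobs, the multiplication is easy to script. The sketch below is only an illustration: `added_processing_hours` is a hypothetical helper, and it assumes the same delay applies to every page and that pages are processed one after another.

```python
def added_processing_hours(pages: int, delay_seconds_per_page: float) -> float:
    """Total extra processing time, in hours, from a fixed per-page delay.

    Assumption: the delay is identical on every page and pages are
    processed serially, so the delays simply add up.
    """
    return pages * delay_seconds_per_page / 3600


# Half a second on each page of a 10,000-page job.
small_job = added_processing_hours(10_000, 0.5)

# A tenth of a second on each page of a million-page job.
big_job = added_processing_hours(1_000_000, 0.1)

print(f"10,000 pages at 0.5 s each:    {small_job:.2f} hours")
print(f"1,000,000 pages at 0.1 s each: {big_job:.2f} hours")
```

This gives roughly 1.4 hours for the first case and about 27.8 hours for the second, so a tenth of a second per page is already a little more than a full day on a million-page job.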

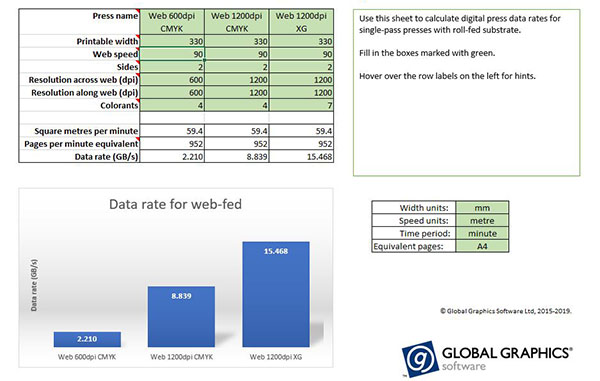

If you’re printing at 120ppm the DFE must process each page in an average of half a second or less to keep up with the press. The fastest continuous feed inkjet presses at the time of writing are capable of printing an area equivalent to over 13,000 pages per minute, which means each page must be processed in just over 4ms. It doesn’t take much of a slow-down to start impacting throughput.
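The per-page time budget is simply one minute divided by the press speed. As a rough sketch (the name `page_budget_ms` is hypothetical, and it assumes a single processing pipeline that must keep pace with the press on average):

```python
def page_budget_ms(press_speed_ppm: float) -> float:
    """Average time, in milliseconds, available to process one page."""
    if press_speed_ppm <= 0:
        raise ValueError("press_speed_ppm must be positive")
    return 60_000 / press_speed_ppm


# The two press speeds mentioned in the text.
for speed in (120, 13_000):
    print(f"{speed:>6} ppm -> {page_budget_ms(speed):.2f} ms per page")
```

At 13,000 pages per minute the budget works out to roughly 4.6ms. A DFE that RIPs several pages in parallel has proportionally more time for each page, but the average across all of them must still meet this figure.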

If you’re involved in this kind of calculation you may find the digital press data rate calculator useful.

This extra load has led DFE builders to develop a variety of optimizations. Most of these work by reducing the amount of data that must be RIPped. But even with those optimizations a complex VDP job typically requires significantly more processing power than a ‘static’ job where every copy is the same.

The amount of processing required to prepare a PDF file for print in a DFE can vary hugely without affecting the visual appearance of the printed result, depending on how it is constructed.

Poorly constructed PDF files can therefore impact a print service provider in one or both of two ways:

- Output is not achieved at engine speed, reducing return on investment (ROI) because fewer jobs can be produced per shift. In extreme cases when printing on a continuous feed (web-fed) press a failure to deliver rasters for printing fast enough can also lead to media wastage and may confuse in-line or near-line finishing.

- In order to compensate for jobs that take longer to process in the DFE, press vendors often provide more hardware to expand the processing capability, increasing the bill of materials, and therefore the capital cost of the DFE.

Once the press is installed and running the production manager will usually calculate and tune their understanding of how many jobs of what type can be printed in a shift. Customer services representatives work to ensure that customer expectations are set appropriately, and the company falls into a regular pattern. Most jobs are quoted on an acceptable turn-round time and delivered on schedule.

A job that takes much longer than expected to process in the DFE can break that pattern. Depending on how many presses the print site has, and how they are connected to one or more DFEs, it may leave a press sitting idle, waiting for pages to print. It may also delay other jobs in the queue or mean that they must be moved to a different press. Moving jobs at the last minute may not be easy if the presses available are not identical. Different presses may require different print streams or imposition and there may be limitations on stock availability, etc.

Many jobs have tight delivery deadlines; they may need to be ready for a specific time, with penalties for late delivery, or the potential for reduced return for the marketing department behind a direct mail campaign. Brand owners may be ordering labels or cartons on a just-in-time (JIT) plan, and there may be consequences for late delivery ranging from an annoyed customer to penalty clauses being invoked.

Those problems for the print service provider percolate upstream to brand owners and other groups commissioning digital print. Producing an inefficiently constructed PDF file will increase the risk that your job will not be delivered by the expected time.

You shouldn’t take these recommendations as suggesting that the DFE on any press is inadequate. Think of it as the equivalent of a suggestion that you should not fill your brand-new Ferrari with cheap and inferior fuel!

The above is an excerpt from Full Speed Ahead: how to make variable data PDF files that won’t slow your digital press. The guide is designed to help you avoid making jobs that disrupt and delay the printing process, increasing the probability that everyone involved in delivering the printed piece hits their deadlines reliably and achieves their goals effectively.


About the author:

Martin Bailey first joined what has now become Global Graphics Software in the early nineties, and has worked in customer support, development and product management for the Harlequin RIP as well as becoming the company’s Chief Technology Officer. During that time he’s also been actively involved in a number of print-related standards activities, including chairing CIP4, CGATS and the ISO PDF/X committee. He’s currently the primary UK expert to the ISO committees maintaining and developing PDF and PDF/VT.
